Bogdana Rakova

Bogdana is a data scientist on the Responsible AI team at Accenture, a research fellow at Partnership on AI, and a member of the board of directors of the Happiness Alliance. She was a lead contributor to the IEEE 7010 Recommended Practice for Assessing the Impact of Autonomous and Intelligent Systems on Human Well-Being. In 2018 she took part in the Assembly: Ethics and Governance of AI program, a collaboration between the Berkman Klein Center for Internet and Society at Harvard Law School and the MIT Media Lab. She has also been a research engineer at the Think Tank Team innovation lab at Samsung Research, a student and later a teaching fellow at Singularity University, and a startup co-founder at the intersection of AI, Future of Work, and Manufacturing.

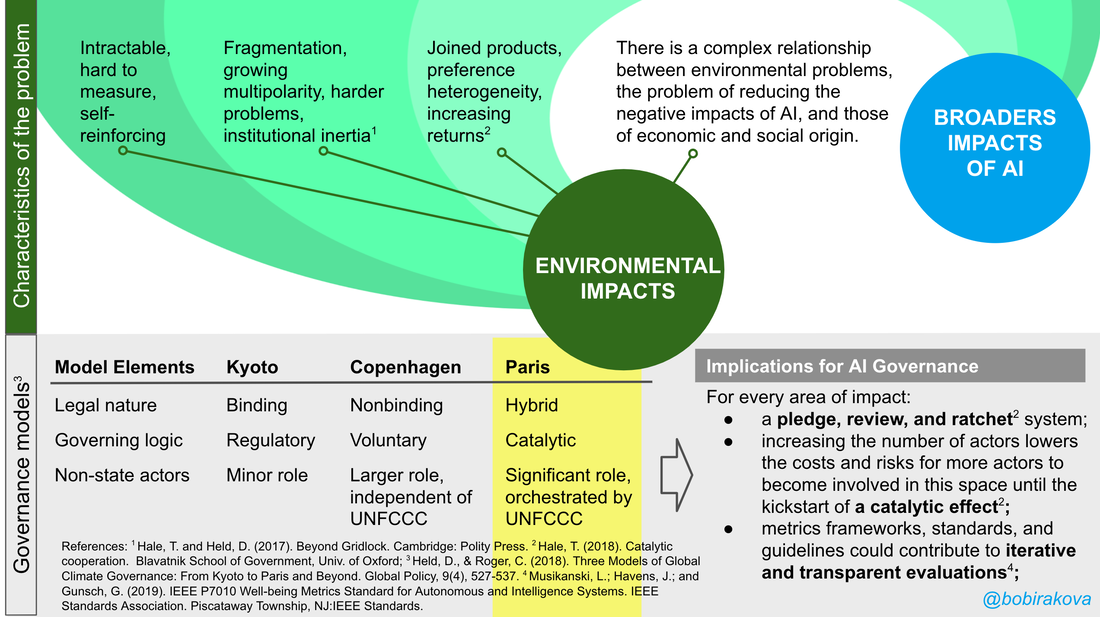

The future recognizes that social and ecological justice are inseparable. Well-being measurement frameworks empower social and environmental change through response-able systems that enable participation and inclusion facilitated by data and AI governance frameworks.

I embrace a radical speculative futures framing in which well-being impact assessments have become widely accepted and adopted as the industry standard for research and development of AI-based systems. The measurement and consideration of well-being are embraced and incentivized by governments, corporations, academia, civil society, and others as a core aspect of their work. The broad definition of well-being allows practitioners and researchers to account for and protect the multidimensional aspects of human agency and identity in the repeated interactions between people and AI systems. Well-being indicator frameworks allow for the consideration of future people while also acknowledging the legacy of existing power structures.

Practically, AI well-being impact assessments provide (1) a means of measuring and assessing the impacts of AI on community well-being, (2) the engagement of communities in the development, deployment and management of AI, and (3) the creation of AI that improves community well-being and safeguards communities from social, ecological, economic and other threats such as climate change, dismantling of democracy, destabilizing economic inequality, and decimation of natural resources.
